Mistral winds freeze European ambitions

The unexpected partnership between French AI startup Mistral and Microsoft. The EU kicks out Amazon lobbyists. Sora and the age of verification.

Digital Conflicts is a bi-weekly briefing on the intersections of digital culture, AI, cybersecurity, digital rights, data privacy, and tech policy with a European focus.

Brought to you with journalistic integrity by Guerre di Rete, in partnership with the University of Bologna's Centre for Digital Ethics.

New to Digital Conflicts? Subscribe for free to receive it by email every two weeks.

N.4 - March 4, 2024

Authors: Carola Frediani and Andrea Daniele Signorelli

Index:

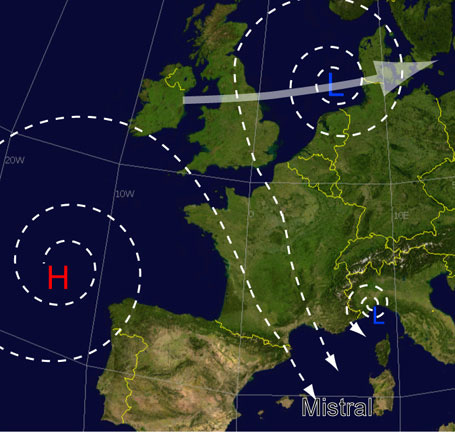

Freezing Mistral

Amazon and the EU

The Sora Effect

In Brief

AI

Freezing Mistral

This week we have to talk about Mistral, a French startup founded in Paris less than a year ago (in April 2023) that raised 500 million euros in its first eight months and was valued at around $2 billion last December. After Germany's Aleph Alpha, Mistral is the second European AI startup to raise such a substantial amount of funding.

The founders come from DeepMind (CEO Arthur Mensch) and Meta (Guillaume Lample and Timothée Lacroix), and several major investors are from Silicon Valley. But Mistral's co-founding advisers also include Cédric O, France's former digital minister, who has excellent relations with President Macron. "When a draft of the EU’s AI Act last year threatened to force Mistral to divulge its data recipe, Cédric O co-ordinated, with Mr Macron’s backing, a Franco-German effort to oppose such provisions. These were duly excised from the bill", writes The Economist.

This techno-political, Franco-American mix partly explains why, although Mistral presents itself as a champion of open-source AI (as opposed to OpenAI's increasingly closed and proprietary approach) and of an emerging European tech industry, its agreement with Microsoft was an unannounced (and, as we shall see, controversial) partnership, but hardly an unpredictable one.

On February 26, Microsoft and Mistral announced a "multi-year partnership" under which the startup will make its language models available on Microsoft's Azure AI platform. Mistral is the second company to do so, after OpenAI. The two companies will also explore collaborating on training purpose-specific models for selected customers, including European public sector workloads.

As part of the deal, Microsoft said it would invest in Mistral but did not disclose financial details, the Financial Times reports. Bloomberg, however, reports an investment of €15 million, to be converted into shares in the startup's next funding round.

Microsoft has already invested around $13 billion in OpenAI, an investment that is being scrutinised by competition authorities in the US, EU and UK. The European Commission has said that regulators will analyse Microsoft's investment in Mistral AI and, according to Bloomberg, the move could lead to a formal investigation. "The Commission is looking into agreements that have been concluded between large digital market players and generative AI developers and providers", European Commission spokeswoman Lea Zuber told Politico.

But the issue is more complex than it first appears. Euronews describes some members of the European Parliament as "furious", particularly because the EU's artificial intelligence regulation (the AI Act) was partly amended to accommodate the demands of companies such as Mistral, which had positioned itself as the voice of both open-source models and European industry.

"On a technical level and a political level, in the [European] Parliament we are extremely furious because the French government for months was making this argument of European leadership, meaning that those companies should be able to scale up without help from Chinese or US companies", said Kai Zenner, head of office and digital policy adviser for Axel Voss, MEP for the European People’s Party (EPP). "They were always blaming the Parliament that we are making it kind of impossible, for those national champions, unicorns to try to compete with their global competitors", he told Euronews. Mistral AI – Zenner added – had argued that if its demands were not met, it would be forced to work with companies such as Microsoft. "Now they have obtained all their requests, and they are still doing it, and I just find it ridiculous".

Given the time needed to finalise such an agreement, and the fact that the trilogue (the negotiations between the European Parliament, the Council and the Commission) was still ongoing in December, it is understandable that some politicians feel betrayed.

"This is a mind-blowing announcement", tweeted journalist Luca Bertuzzi, who closely followed the AI Act negotiations. "Mistral AI, the French company that has been fighting tooth and nail to water down the AI Act's foundation model rules, is partnering up with Microsoft. So much for 'give us a fighting chance against Big Tech'".

“The first question that comes to mind is: was this deal in the making while the AI Act was being negotiated? That would mean Mistral discussed selling a minority stake to Microsoft while playing the 'European champion' card with the EU and French institutions. (...) The other question is how much the French government knew about this upcoming partnership with Microsoft. It seems unlikely Paris was kept completely in the dark, but cosying up with Big Tech does not really sit well with France's strive for 'strategic autonomy'", Bertuzzi concludes.

The French government, however, rejects the allegations. The Secretary of State for Digital Affairs, Marina Ferrari, said that France was not aware of the discussions between Microsoft and Mistral, according to the French newspaper La Tribune.

As if that weren't enough, almost simultaneously Mistral announced a new language model, Mistral Large. "It’s designed to more closely compete with OpenAI’s GPT-4 model. Unlike some of Mistral’s previous models, it won’t be open source", writes The Verge.

"The decision by Mistral's founders shows that the Americans have already achieved a dominant position in the race for artificial intelligence," writes La Tribune. "It brutally exposes a reality that governments don't want to see, namely that European technological independence remains, at least for the moment, an illusion."

An interesting aspect of Mistral Large, especially for European users, is that, according to the startup itself, it is “natively fluent in English, French, Spanish, German, and Italian, with a nuanced understanding of grammar and cultural context".

Finally, Mistral has released a new conversational chatbot, Le Chat, based on several of its models.

(A digression here, perhaps a little pedantic, but I think a necessary one. I have tried Mistral, and it writes well in Italian, but like other LLMs it is prone to hallucinations. When I asked it about a famous historical monument in Genoa, not only did it get the details wrong, it also provided a non-existent link to a non-existent municipality. Someone not from the area would have had a hard time realising it was a hallucination. This is not a warning about Mistral specifically, but a reminder that this is how language models behave. If I were a teacher, I would bring its answers to class and ask students to identify all the hallucinated information and explain how they reconstructed the correct information.)

AMAZON AND THE EU

The European Parliament kicks out Amazon lobbyists

Amazon lobbyists have been banned from the European Parliament until further notice after an internal body unanimously decided on 27 February to strip them of their access badges, EUObserver reports. The ban follows a request from the Parliament's employment committee, whose MEPs decided to take action against the US company for repeatedly failing to cooperate with them since 2021.

The request was backed by 30 trade unions and civil society organisations, which in a letter dated 12 February described Amazon's lack of cooperation as a "willful obstruction of the democratic scrutiny of the company’s activities".

According to the letter, Amazon has repeatedly failed to attend hearings on working conditions in its warehouses and cancelled MEPs' visits to its sites in Germany and Poland. The company, the letter continues, has also failed to declare its links with various think tanks and has declared a lobbying budget that is “seemingly too low”.

This is only the second time in the history of the European Parliament that a company has been banned from lobbying. The first time was for Monsanto in 2017, also for failing to attend hearings. Such decisions are provided for in the EU Parliament's rules, which state that a badge can be withdrawn if a party "has refused, without offering a sufficient justification, to comply with a formal summons to attend a hearing or committee meeting or to cooperate with a committee of inquiry".

THE SORA EFFECT

How to adapt to the wave of increasingly realistic synthetic content

Sam Gregory, executive director of the NGO WITNESS, which uses video to document human rights abuses around the world, has carried out an early analysis of how a technology like Sora could affect trust in what we see. Sora is OpenAI's new text-to-video model, which can create highly realistic synthetic videos from text prompts. Although Sora is not yet available to the public, OpenAI has released several examples of videos produced with it (you can see them here).

Until now, Gregory explains in a series of social media posts, having multiple viewpoints on the same event has been a good starting point for assessing whether it actually happened and in what context. In almost all cases of state or police violence, for example, there is a dispute about what happened before or after the camera started recording an action or a perceived reaction. At the same time, a shaky mobile phone shot has served as a "powerful indicator of emotional credibility", a marker of authenticity.

But now we face photorealistic synthetic videos that can adopt different styles, including an amateurish one, and can show the same scene from several camera perspectives at once. Sora can also "add video (essentially outpainting for videos) back and forth in time from an existing frame". [Outpainting is a feature of image generators such as DALL-E that extends an image beyond its original borders.]

"Sora’s most concerning ability from the tech specs", says researcher Eryk Salvaggio, "is that it can depict multiple scenarios that conclude at a given image. That is gonna be a disinfo topic at a few conferences in the near future, I’d guess. [...] In theory, let’s assume you have a social media video of police that starts from the moment the police start using unwarranted force against a person on the street. This says they can seamlessly create up to 46 seconds of synthetic video that would end where the violence clip starts. What happens in that 46 seconds is guided by your prompt, whether it’s teenager throws hand grenade at smiling policeman or friendly man offers flowers to angry police".

In a sense, Gregory adds, "realistic videos of fictitious events align well with existing patterns of sharing shallowfake videos and images (e.g. mis-contextualized or lightly edited videos transposed from one date or time to another place), where the exact details don't matter as long as they are a convincing enough fit with assumptions. In realistic videos of events that never happened, we'd be missing the ability to search for the referent - i.e. what we do now with shallowfake search, use a reverse image search to find the original, or use Google About this Image".

"As text-to-video and video-to-video etc expand”, concludes Gregory, “we must work out how to reinforce trust and ensure media transparency, deepen detection capabilities, restrict out-of-line-usages and enforce accountability across the AI pipeline".

Media literacy starts with teachers

Closely related to this discussion is the importance of media literacy, and specifically of teaching students in schools how to recognize and analyze online content. Giancarlo Fiorella (Bellingcat) discusses this in a podcast, explaining how Bellingcat developed a curriculum to train teachers in the UK.

How to promote AI awareness among young people

This is another initiative aimed at British students. The Royal Institution dedicated its 2023 Christmas Lectures to the theme "The Truth about AI". In the video below, Professor Mike Wooldridge explains how AI systems learn and what this technology can do, using a series of entertaining experiments and demonstrations. It's a high-quality piece of science communication aimed at young people, and highly recommended for everyone.

IN BRIEF

The UK Is GPS-Tagging Thousands of Migrants

"In England and Wales, since 2019, people convicted of knife crime or other violent offenses have been ordered to wear GPS ankle tags upon their release from prison", reports Wired.com. "But requiring anyone facing a deportation order to wear a GPS tag is a more recent and more controversial policy, introduced in 2021. Migrants arriving in small boats on the coast of southern England, with no previous convictions, were also tagged during an 18-month pilot program that ended in December 2023. Between 2022 and 2023, the number of people ordered to wear GPS trackers jumped by 56 percent to more than 4,000 people".

There’s More Than Just Shadowbanning on Instagram

The Markup reviewed hundreds of screenshots and videos from Instagram users who documented behavior that looked like censorship, analyzed metadata from thousands of accounts and posts, spoke with experts, and interviewed 20 users who believed their content had been blocked or demoted. Using the information gathered, The Markup then manually tested Instagram’s automated moderation responses on 82 new accounts and 14 existing accounts.

The investigation found that Instagram heavily demoted nongraphic images of war, deleted captions and hid comments without notification, erratically suppressed hashtags, and denied users the option to appeal when the company removed their comments, including ones about Israel and Palestine, as “spam”.

AI & Environmental Impact

“It remains very hard to get accurate and complete data on environmental impacts. The full planetary costs of generative AI are closely guarded corporate secrets. Figures rely on lab-based studies by researchers such as Emma Strubell and Sasha Luccioni; limited company reports; and data released by local governments. At present, there’s little incentive for companies to change”, writes Kate Crawford (author of Atlas of AI) in Nature.

This article from The Verge tries to put numbers on it, or at least offers some partial estimates.

Journalism

Julia Angwin (formerly of ProPublica) has launched a new publication, Proof News. It aims to conduct "methodologically precise investigations, build public datasets, collaborate with journalists, researchers, and influencers worldwide, and promote best practices in evidence collection."

In its first investigation, Proof News tested several AI language models to see how accurate their election information was. Spoiler: it wasn't.

Paper

Hallucination is inevitable - arXiv